Evaluating MariaDB on the openEuler-ARM stack

openEuler is an open-source, Linux-based operating system with a customized scheduler, I/O stack, libraries, and more. It is optimized for the ARM64 architecture, which makes it interesting to evaluate how enterprise software runs on the openEuler-ARM stack. As part of this study, let’s explore how MariaDB, which already ships ARM packages for several operating systems, performs on openEuler.

Setup

Given that openEuler is optimized for ARM, let’s evaluate it against the Ubuntu-on-ARM stack (a proven configuration).

- Machine configuration (Huawei Cloud)

- openEuler-on-arm: 24 vCPU - 48 GB (Kunpeng 920 2.6 GHz)

- ubuntu-on-arm: 24 vCPU - 48 GB (Kunpeng 920 2.6 GHz)

- Workload:

- sysbench: point-select, read-only, update-index, update-non-index

- Other configuration details:

- data-size: 35 GB, buffer-pool: 40 GB (all data in memory) / 20 GB (part of the data in memory)

- redo-log-size: 10 GB

- storage: EVS volume with 22K IOPS (read/write mixed).

- MariaDB Version: 10.8.3 (GA).

- OS Version (mostly with default settings)

- Ubuntu: 18.04.3 LTS

- openEuler: 20.03 LTS-SP2
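The workload matrix above (four sysbench workloads, each run with uniform and zipfian access) can be sketched as a small command generator. This is only an illustration, assuming the sysbench 1.0 script names (`oltp_point_select` and so on) and its `--rand-type` option; the socket path, user, table count, table size, run time, and thread counts are hypothetical placeholders, not the values used in this study.

```python
import itertools
import shlex

# Assumed sysbench 1.0 script names for the four workloads listed above.
WORKLOADS = [
    "oltp_point_select",
    "oltp_read_only",
    "oltp_update_index",
    "oltp_update_non_index",
]
RAND_TYPES = ["uniform", "zipfian"]


def sysbench_command(workload, rand_type, threads,
                     tables=100, table_size=1_500_000, seconds=300):
    """Build one sysbench invocation as an argument list.

    tables, table_size and seconds are placeholder defaults, not the
    values used to build the 35 GB data set in this study.
    """
    return [
        "sysbench", workload,
        "--db-driver=mysql",
        "--mysql-socket=/tmp/mysql.sock",  # hypothetical socket path
        "--mysql-user=root",               # hypothetical user
        f"--tables={tables}",
        f"--table-size={table_size}",
        f"--threads={threads}",
        f"--time={seconds}",
        f"--rand-type={rand_type}",
        "run",
    ]


def build_matrix(thread_counts):
    """Every workload x distribution x thread-count combination."""
    return [
        sysbench_command(workload, rand_type, threads)
        for workload, rand_type, threads
        in itertools.product(WORKLOADS, RAND_TYPES, thread_counts)
    ]


if __name__ == "__main__":
    # Thread counts here are illustrative only.
    for cmd in build_matrix([64, 128]):
        print(shlex.join(cmd))
```

Running the same generated list on both machines keeps the two stacks on identical command lines, so any difference comes from the OS rather than from the benchmark invocation.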

Benchmarking

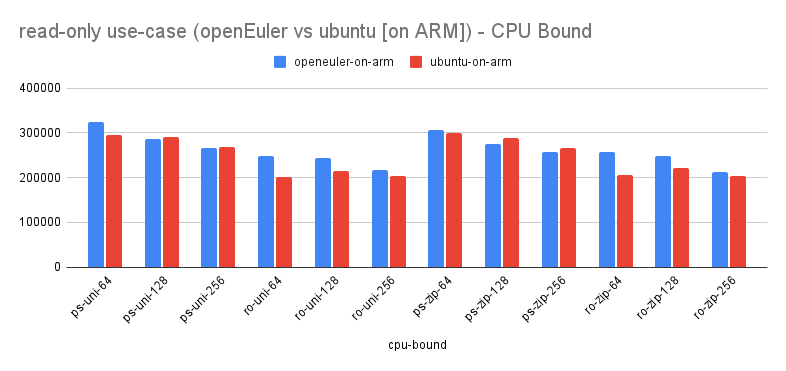

Read Only Workload

Let’s start with the read-only workloads. sysbench offers two: point-select and read-only.

Observations:

- MariaDB on openEuler outperforms Ubuntu across all scenarios (uniform, zipfian), with the improvement exceeding 25% in some cases.

- Performance profiling pointed to the following:

- openEuler handles contention better (less contention reported).

- memcpy appears to be optimized in openEuler, given that it does not show up in the top-5 perf hot-function list.

- Performance schema profiling also confirmed a significant reduction in LOCK_table_cache contention.

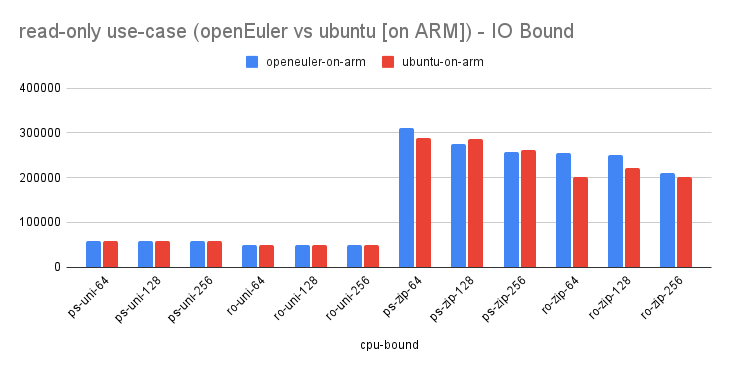

Now let’s test the I/O-bound use case for the read-only workload.

Observations:

- Depending on the workload, openEuler is either on par (lower-contention workload, viz. uniform) or better (higher-contention workload, viz. zipfian).

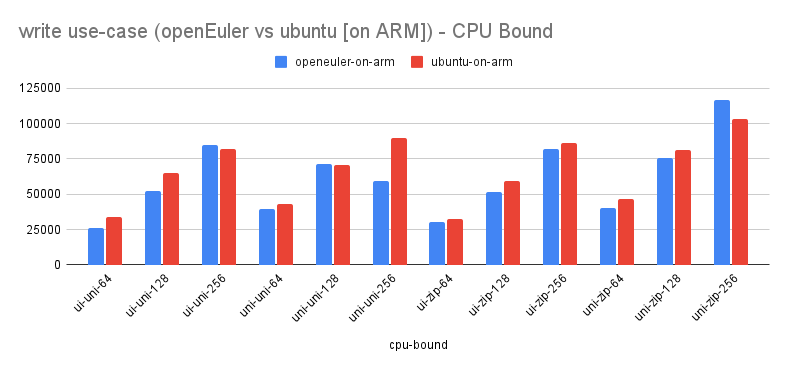

Write Workload

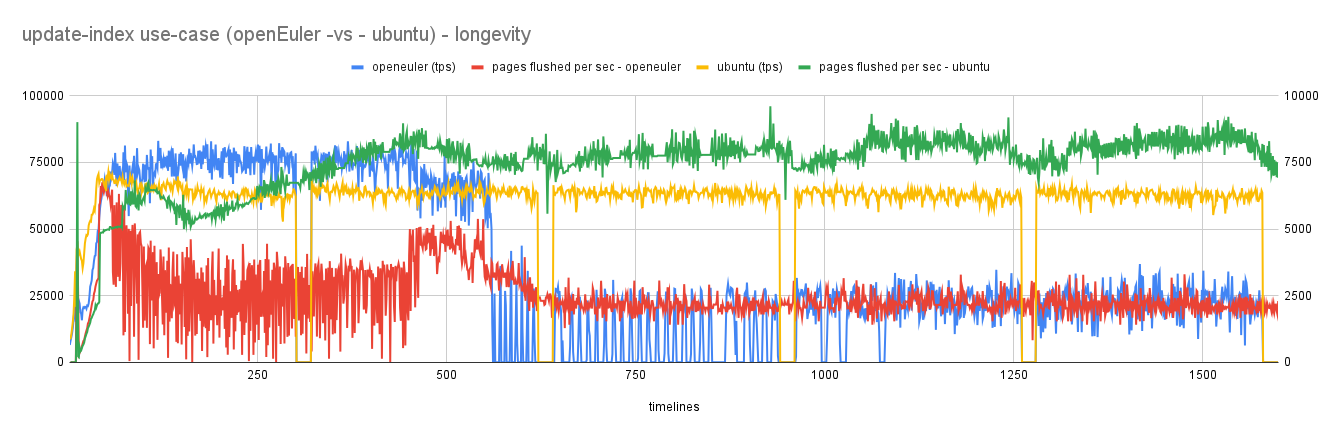

Let’s move on to the write workloads. sysbench offers two: update-index and update-non-index.

Observations:

- With the write workload, openEuler shows mixed performance: it lags with the uniform workload but pulls ahead in the zipfian use cases.

- To understand this lag better, we ran several studies and eventually found that page flushing is inconsistent on openEuler, which increases the checkpoint age and in turn causes the lag.

- The extended run shows a clear picture: openEuler performs better than Ubuntu during the initial part of the run but then loses steam due to inconsistent, below-par page flushing.

- Performance profiling reveals a significant increase in contention on rseg->latch and fil_space_latch with openEuler (they occupy the top two slots, unlike on Ubuntu).

openEuler

| wait/synch/rwlock/innodb/trx_rseg_latch | 18733914.8115 | 176880804 |

| wait/synch/rwlock/innodb/fil_space_latch | 6036822.3603 | 6801714 |

| wait/synch/cond/mysys/COND_timer | 1857132.6029 | 6896 |

| wait/synch/cond/aria/SERVICE_THREAD_CONTROL::COND_control | 1834108.5978 | 61 |

| wait/synch/rwlock/sql/MDL_lock::rwlock | 293917.5930 | 344366933 |

| wait/synch/mutex/innodb/flush_list_mutex | 181595.7034 | 403167359 |

| wait/synch/sxlock/innodb/index_tree_rw_lock | 101674.7901 | 194147135 |

| wait/synch/rwlock/innodb/log_latch | 58528.4128 | 302800939 |

| wait/synch/mutex/sql/LOCK_table_cache | 43197.0327 | 110726733 |

| wait/synch/mutex/innodb/buf_pool_mutex | 26246.6964 | 72853477 |

Ubuntu

| wait/synch/cond/mysys/COND_timer | 1889638.3321 | 7310 |

| wait/synch/cond/aria/SERVICE_THREAD_CONTROL::COND_control | 1866600.4146 | 62 |

| wait/synch/rwlock/innodb/trx_rseg_latch | 880059.8838 | 432965780 |

| wait/synch/mutex/innodb/flush_list_mutex | 740073.9749 | 666248407 |

| wait/synch/rwlock/sql/MDL_lock::rwlock | 461974.0141 | 659530098 |

| wait/synch/rwlock/innodb/fil_space_latch | 411518.1018 | 19912041 |

| wait/synch/rwlock/innodb/log_latch | 276165.8275 | 607470128 |

| wait/synch/mutex/sql/LOCK_table_cache | 141710.7974 | 188402516 |

| wait/synch/mutex/innodb/buf_pool_mutex | 126368.0575 | 177463579 |

| wait/synch/sxlock/innodb/index_tree_rw_lock | 43622.2066 | 403046978 |
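To make the gap between the two listings easier to read, the rows can be parsed and compared per wait event. The sketch below copies the rows verbatim from the two listings and assumes the second column is the accumulated wait time and the third the number of waits; the source does not state the units, so only the openEuler-to-Ubuntu ratio is computed.

```python
OPENEULER = """
| wait/synch/rwlock/innodb/trx_rseg_latch | 18733914.8115 | 176880804 |
| wait/synch/rwlock/innodb/fil_space_latch | 6036822.3603 | 6801714 |
| wait/synch/cond/mysys/COND_timer | 1857132.6029 | 6896 |
| wait/synch/cond/aria/SERVICE_THREAD_CONTROL::COND_control | 1834108.5978 | 61 |
| wait/synch/rwlock/sql/MDL_lock::rwlock | 293917.5930 | 344366933 |
| wait/synch/mutex/innodb/flush_list_mutex | 181595.7034 | 403167359 |
| wait/synch/sxlock/innodb/index_tree_rw_lock | 101674.7901 | 194147135 |
| wait/synch/rwlock/innodb/log_latch | 58528.4128 | 302800939 |
| wait/synch/mutex/sql/LOCK_table_cache | 43197.0327 | 110726733 |
| wait/synch/mutex/innodb/buf_pool_mutex | 26246.6964 | 72853477 |
"""

UBUNTU = """
| wait/synch/cond/mysys/COND_timer | 1889638.3321 | 7310 |
| wait/synch/cond/aria/SERVICE_THREAD_CONTROL::COND_control | 1866600.4146 | 62 |
| wait/synch/rwlock/innodb/trx_rseg_latch | 880059.8838 | 432965780 |
| wait/synch/mutex/innodb/flush_list_mutex | 740073.9749 | 666248407 |
| wait/synch/rwlock/sql/MDL_lock::rwlock | 461974.0141 | 659530098 |
| wait/synch/rwlock/innodb/fil_space_latch | 411518.1018 | 19912041 |
| wait/synch/rwlock/innodb/log_latch | 276165.8275 | 607470128 |
| wait/synch/mutex/sql/LOCK_table_cache | 141710.7974 | 188402516 |
| wait/synch/mutex/innodb/buf_pool_mutex | 126368.0575 | 177463579 |
| wait/synch/sxlock/innodb/index_tree_rw_lock | 43622.2066 | 403046978 |
"""


def parse_rows(text):
    """Map event name -> (wait_time, wait_count) from pipe-delimited rows."""
    rows = {}
    for line in text.strip().splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) != 3:
            continue  # skip anything that is not a three-column row
        name, wait_time, count = cells
        rows[name] = (float(wait_time), int(count))
    return rows


def wait_ratios(left, right):
    """Wait-time ratio left/right for events present in both listings."""
    return {
        name: left[name][0] / right[name][0]
        for name in left
        if name in right
    }


if __name__ == "__main__":
    ratios = wait_ratios(parse_rows(OPENEULER), parse_rows(UBUNTU))
    # Largest openEuler/Ubuntu ratio first.
    for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{ratio:8.2f}x  {name}")
```

A ratio above 1 means the event accumulated more wait on openEuler than on Ubuntu. Sorting by ratio puts trx_rseg_latch and fil_space_latch at the top, while several other events (flush_list_mutex, log_latch, LOCK_table_cache, buf_pool_mutex) come out below 1.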

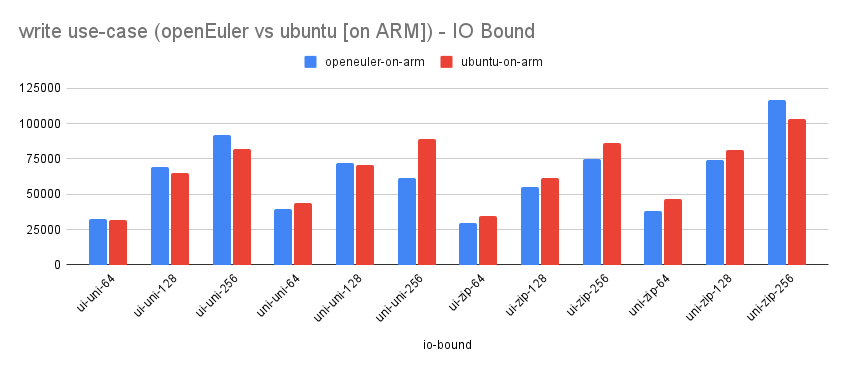
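The checkpoint-age effect mentioned in the write-workload observations can be illustrated with a little arithmetic. Checkpoint age is the amount of redo written since the last checkpoint (current LSN minus checkpoint LSN); when flushing falls behind, that gap grows toward the redo log size (10 GB in this setup). The sketch below is a minimal illustration: the LSN samples are hypothetical, not measured data, and the 75% limit is an arbitrary illustrative threshold, not an InnoDB setting.

```python
GIB = 1024 ** 3
REDO_LOG_BYTES = 10 * GIB  # redo-log-size from the setup section


def checkpoint_age(current_lsn, checkpoint_lsn):
    """Bytes of redo written since the last checkpoint."""
    if checkpoint_lsn > current_lsn:
        raise ValueError("checkpoint LSN cannot be ahead of the current LSN")
    return current_lsn - checkpoint_lsn


def redo_fill(current_lsn, checkpoint_lsn, redo_log_bytes=REDO_LOG_BYTES):
    """Checkpoint age as a fraction of the redo log size."""
    return checkpoint_age(current_lsn, checkpoint_lsn) / redo_log_bytes


def first_sample_over(samples, limit=0.75):
    """Index of the first (current_lsn, checkpoint_lsn) sample whose
    redo fill exceeds `limit`, or None if no sample does.

    `limit` is an illustrative threshold only.
    """
    for index, (current_lsn, checkpoint_lsn) in enumerate(samples):
        if redo_fill(current_lsn, checkpoint_lsn) > limit:
            return index
    return None


if __name__ == "__main__":
    # Hypothetical samples: the checkpoint LSN advances more slowly than
    # the current LSN, as it would when page flushing lags behind writes.
    samples = [(2 * GIB, 1 * GIB), (6 * GIB, 2 * GIB), (11 * GIB, 3 * GIB)]
    for current_lsn, checkpoint_lsn in samples:
        print(f"redo fill: {redo_fill(current_lsn, checkpoint_lsn):.0%}")
    print("first sample over limit:", first_sample_over(samples))
```

Sampling both LSN values at intervals during an extended run on each OS would show whether the fill fraction stays flat or keeps climbing; how those values are collected is left out of this sketch.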

Now let’s test the I/O-bound use case for the write workload.

Observations:

- Performance is quite similar to the CPU-bound use case.

Conclusion

From the study so far, openEuler on ARM looks promising. It scored well on the read-only front and showed mixed results for the write workload. The contention issue needs a closer look to understand why the said latches are so prominent on openEuler, as do the possible I/O issues.

If you have questions, do let me know and I will try to answer them.