MariaDB on openEuler-ARM stack - Multi-NUMA study

MariaDB on the openEuler-ARM stack showed promising results during the initial evaluation (see the earlier blog post if you missed it). Taking the assessment further, we decided to evaluate the setup in a multi-NUMA environment, since that better represents enterprise deployments. The aim is to find out whether things scale as they do on other OS-ARM stacks, and whether the bottlenecks remain the same or something new pops up on the openEuler-ARM stack.

Setup

- Machine Configuration

- ARM: 96 vCPU (4 NUMA) ARM Kunpeng 920 CPU @ 2.6 GHz (openEuler-ARM stack)

- Workload:

- sysbench: point-select, read-only, update-index, update-non-index

- Other configuration details: io-capacity/io-capacity-max = 10K/18K

- data-size: 74GB, buffer-pool: 80GB (all-in-memory)

- redo-log-size: 20 GB

- storage: sequential read/write IOPS: 33K+/16K+; random read/write IOPS: 28K+/14K+

- MariaDB Version: 10.10 (#88b2235 in progress)

- OS Version (mostly with default settings)

- openEuler: 20.03 LTS-SP2
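To make the sizing above easier to reproduce, here is a minimal sketch that renders the quoted values as a `my.cnf` fragment. The option names are standard InnoDB variables, but mapping the shorthand in the list ("buffer-pool", "redo-log-size", "io-capacity/max") onto them is our assumption, and `render_cnf` is a hypothetical helper rather than part of any MariaDB tooling.

```python
# Hypothetical helper: render the sizes quoted in the setup list as a my.cnf fragment.
# The mapping from the post's shorthand to these InnoDB options is an assumption.
SETTINGS = {
    "innodb_buffer_pool_size": "80G",   # buffer-pool: 80GB
    "innodb_log_file_size": "20G",      # redo-log-size: 20 GB
    "innodb_io_capacity": 10_000,       # io-capacity = 10K
    "innodb_io_capacity_max": 18_000,   # io-capacity-max = 18K
}


def render_cnf(settings, section="mysqld"):
    """Return a config fragment with one 'key = value' line per setting."""
    lines = [f"[{section}]"]
    lines += [f"{key} = {value}" for key, value in settings.items()]
    return "\n".join(lines)


print(render_cnf(SETTINGS))
```

Any other settings used in the runs are not covered here; the fragment only reflects the values listed in this post.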

Evaluation

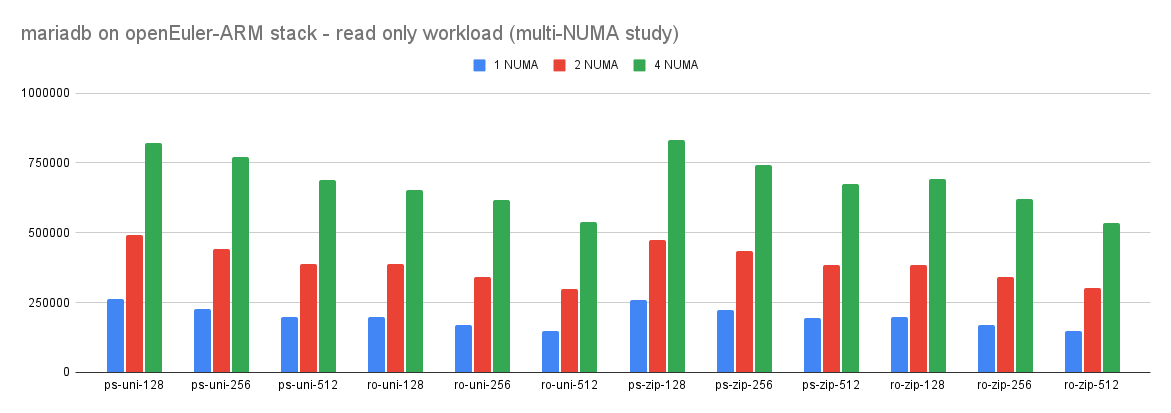

read-only workload

Observations:

- Just like on other OS-ARM stacks, the read-only workload on the openEuler-ARM stack scales almost linearly as NUMA nodes are added.

- In fact, due to lower contention on some mutexes (LOCK_table_cache), the read-only workload performs better on the openEuler-ARM stack than on other stacks.
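One way to put a number on "almost linearly" is a scaling-efficiency ratio: the speedup over the smallest configuration divided by the increase in NUMA nodes. The sketch below is only an illustration of that arithmetic. `scaling_efficiency` is a hypothetical helper, and the throughput figures are placeholders, not measurements from this study.

```python
def scaling_efficiency(throughput_by_nodes):
    """Return {nodes: efficiency} relative to the smallest node count.

    An efficiency of 1.0 means perfectly linear scaling from the baseline.
    """
    base_nodes = min(throughput_by_nodes)
    base_tps = throughput_by_nodes[base_nodes]
    return {
        nodes: (tps / base_tps) / (nodes / base_nodes)
        for nodes, tps in sorted(throughput_by_nodes.items())
    }


# Placeholder figures for illustration only; NOT measurements from this study.
example = {1: 100.0, 2: 190.0, 4: 360.0}
for nodes, eff in scaling_efficiency(example).items():
    print(f"{nodes} NUMA node(s): efficiency {eff:.2f}")
```

Plugging in the throughput read off the graph for 1, 2 and 4 NUMA nodes gives a quick check of how close to linear a workload really is.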

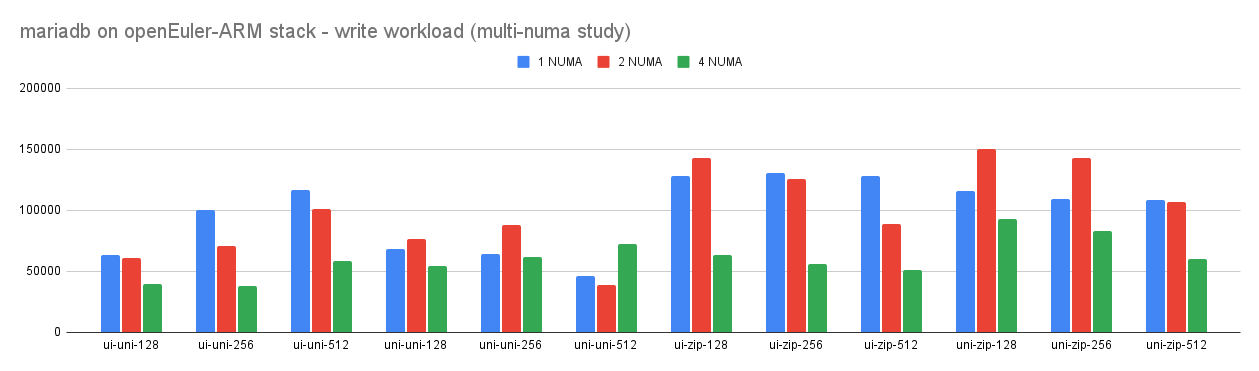

write workload

Observations:

- Again, we observe the same pattern as on other OS-ARM stacks: the write workload fails to scale across multiple NUMA nodes.

- The top contention points remain the same.

openEuler-ARM stack

| Event | Total wait | Count |
|---|---|---|
| wait/synch/rwlock/innodb/log_latch | 19169202.9823 | 199868944 |
| wait/synch/rwlock/innodb/trx_rseg_latch | 785002.5091 | 123518641 |
| wait/synch/mutex/innodb/buf_pool_mutex | 638941.9645 | 56602817 |
| wait/synch/cond/mysys/COND_timer | 402259.4476 | 1532 |
| wait/synch/cond/aria/SERVICE_THREAD_CONTROL::COND_control | 389933.2353 | 13 |
| wait/synch/rwlock/innodb/fil_space_latch | 325025.1544 | 4433912 |
| wait/synch/rwlock/sql/MDL_lock::rwlock | 311635.8508 | 236808760 |
| wait/synch/sxlock/innodb/index_tree_rw_lock | 46259.4764 | 134161482 |
| wait/synch/mutex/sql/LOCK_table_cache | 21153.7182 | 77818000 |
| wait/synch/mutex/sql/THD::LOCK_thd_data | 11876.1819 | 178734356 |

CentOS-ARM stack

| Event | Total wait | Count |
|---|---|---|
| wait/synch/rwlock/innodb/log_latch | 16410690.9523 | 215740728 |
| wait/synch/cond/mysys/COND_timer | 834065.5579 | 3066 |
| wait/synch/rwlock/innodb/trx_rseg_latch | 833276.8134 | 131173975 |
| wait/synch/cond/aria/SERVICE_THREAD_CONTROL::COND_control | 811668.6162 | 27 |
| wait/synch/rwlock/sql/MDL_lock::rwlock | 640257.8943 | 258275876 |
| wait/synch/mutex/innodb/buf_pool_mutex | 587507.9794 | 59236236 |
| wait/synch/rwlock/innodb/fil_space_latch | 341941.7540 | 4669815 |
| wait/synch/sxlock/innodb/index_tree_rw_lock | 64058.7856 | 145183127 |
| wait/synch/mutex/sql/LOCK_table_cache | 38422.9402 | 84390443 |
| wait/synch/mutex/sql/THD::LOCK_thd_data | 11220.5507 | 196408431 |
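The two listings above are easier to compare side by side. Here is a small sketch that parses a few of the rows and prints the openEuler/CentOS ratio per event. It assumes the second column is the total wait and the third is the number of waits; the post does not label the columns, so treat that reading as our assumption. `parse_rows` and `wait_ratio` are hypothetical helpers.

```python
# A few rows copied from the two listings above (openEuler first, then CentOS).
OPENEULER = """
| wait/synch/rwlock/innodb/log_latch | 19169202.9823 | 199868944 |
| wait/synch/rwlock/innodb/trx_rseg_latch | 785002.5091 | 123518641 |
| wait/synch/rwlock/sql/MDL_lock::rwlock | 311635.8508 | 236808760 |
| wait/synch/mutex/sql/LOCK_table_cache | 21153.7182 | 77818000 |
"""
CENTOS = """
| wait/synch/rwlock/innodb/log_latch | 16410690.9523 | 215740728 |
| wait/synch/rwlock/innodb/trx_rseg_latch | 833276.8134 | 131173975 |
| wait/synch/rwlock/sql/MDL_lock::rwlock | 640257.8943 | 258275876 |
| wait/synch/mutex/sql/LOCK_table_cache | 38422.9402 | 84390443 |
"""


def parse_rows(text):
    """Parse '| event | wait | count |' lines into {event: (wait, count)}."""
    rows = {}
    for line in text.strip().splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        name, wait, count = cells
        rows[name] = (float(wait), int(count))  # column meaning is assumed
    return rows


def wait_ratio(a, b):
    """Ratio of total wait in `a` to total wait in `b` for events in both."""
    return {name: a[name][0] / b[name][0] for name in a if name in b}


ratios = wait_ratio(parse_rows(OPENEULER), parse_rows(CENTOS))
for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1]):
    print(f"{name.rsplit('/', 1)[-1]:<20} {ratio:.2f}")
```

A ratio below 1 means less total wait on openEuler for that event. With the rows above, LOCK_table_cache and MDL_lock::rwlock come out well below 1, while log_latch comes out above 1 and remains the dominant contention point on both stacks.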

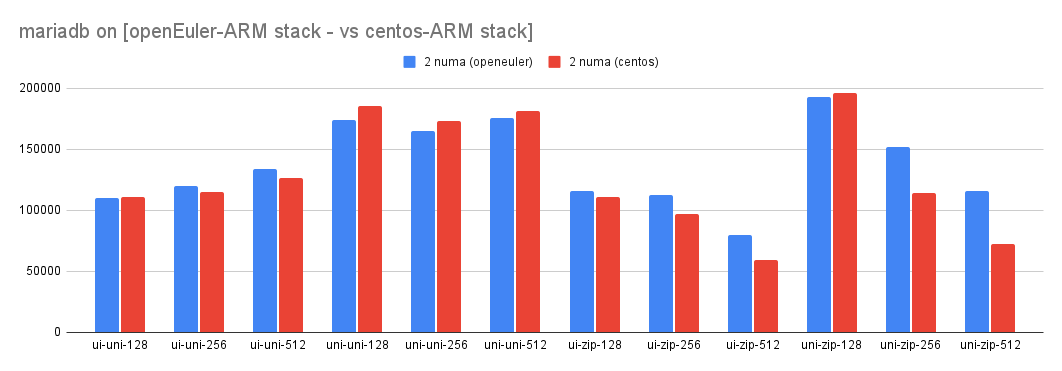

- The openEuler-ARM stack continues to perform on par with other stacks. To verify this, we ran a comparative analysis against the CentOS-ARM stack (using a ramdisk, since the two servers don’t have comparable IO systems).

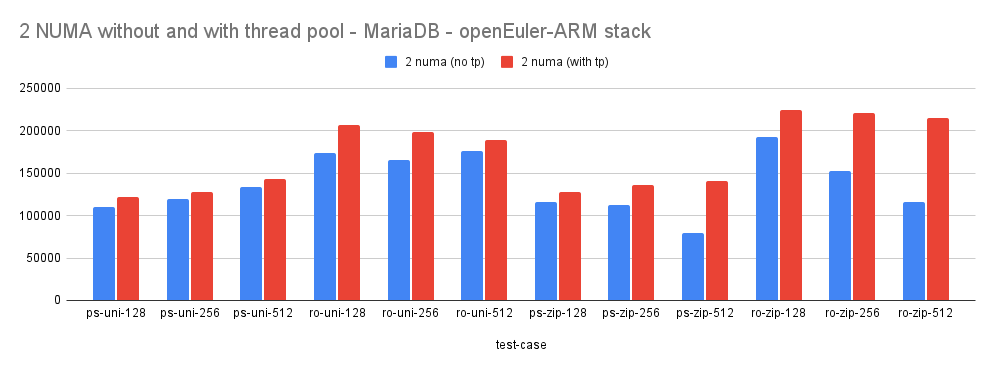

Evaluation using thread pool

The OS-level scheduler plays a significant role in thread handling, so we also decided to evaluate the effect of the thread pool.

Observations:

- As expected, enabling the thread pool has a positive effect on performance, just as on other OS-hardware stacks.

Conclusion

Based on this evaluation, we can comfortably say that MariaDB on the openEuler-ARM stack performs on par with, or better than, proven stacks. Given that, it could be worth considering supporting the openEuler-ARM stack.

If you have more questions, do let me know and I will try to answer them.