MariaDB threadpool and NUMA scalability

MariaDB/MySQL default uses one thread per connection. This approach is generally good if the connection is active for a longer time. If the connections are short-lived then the cost of creating a connection could overshadow the cost of running the query. Also, with increasing scalability, OS-scheduling introduces more jitter. In this case, threadpool could act as a good alternative.

In this article, we will explore the effect of the thread pool with growing NUMA scalability.

Threadpool

MariaDB support dynamic threads pooling allowing it to scale up/down the number of thread groups as needed. Each thread group has 2 threads: listener and worker. Worker thread count could further increase if the stall is detected. You can read more details here but let’s try to understand important parameters that we plan to use/experiment with.

Configuration:

thread_handling=pool-of-threads (to enable thread pool).

thread_pool_size=X (number of threads group that determines how many statements execute in parallel).

thread_pool_idle_timeout=1 (number of seconds after which idle thread should exit if there is no work).

show status like 'Threadpool%';

+-------------------------+-------+

| Variable_name | Value |

+-------------------------+-------+

| Threadpool_idle_threads | 201 |

| Threadpool_threads | 202 |

+-------------------------+-------+

2 rows in set (0.001 sec)

Setup

- Machine Configuration:

- ARM: 128 vCPU (4 NUMA/2 Socket) ARM Kunpeng 920 CPU @ 2.6 Ghz

- Workload (using sysbench):

- CPU bound workload: Pattern: uniform, zipfian

- Other configuration details here (+ skip-log-bin).

- Buffer Pool: 80GB

- Data: 70GB

- Redo-log: 20 GB

- Configuration details: click here [+ skip-log-bin]

- Storage: NvME SSD

- sequential read/write IOPS: 190+K/125+K

- random read/write IOPS: 180+K/65+K

- MariaDB Version: 10.6.5 (tagged GA)

- Scalability: 512-1024 threads

- 28/56/112 threads for server

- 4/8/16 threads for sysbench

- Scalability: 512-1024 threads

Benchmarking

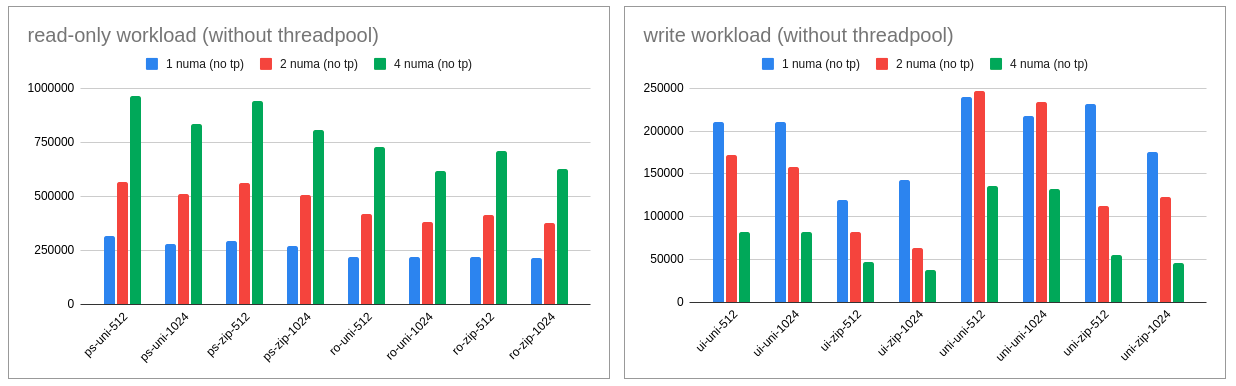

Without Thread Pool (default)

Observations

- For read-only workload, with increasing scalability (in form of NUMA) throughput continues to grow linearly.

- For write workload, with increasing scalability (in form of NUMA) throughput drops. (why? Check this arcticle)

Now let’s enable threadpool and see if this effect could be reversed or limited as threadpool could help eliminate the OS-scheduling jitter.

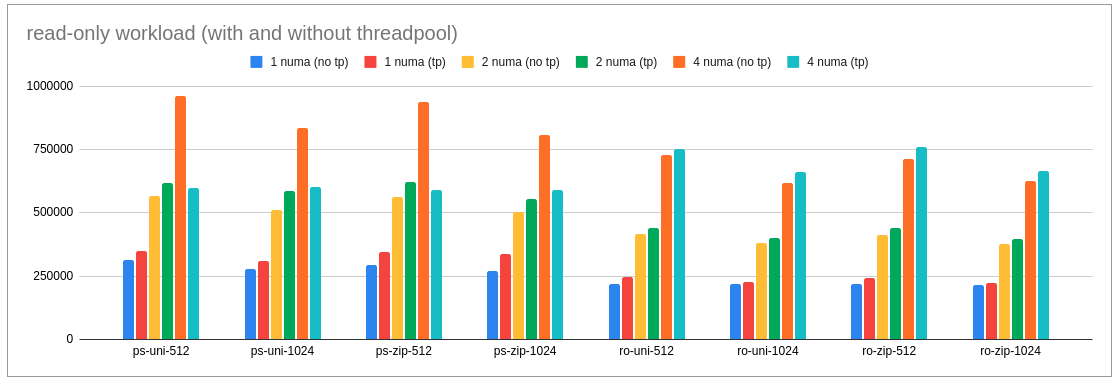

With Thread Pool (thread_handling=pool-of-threads)

Observations

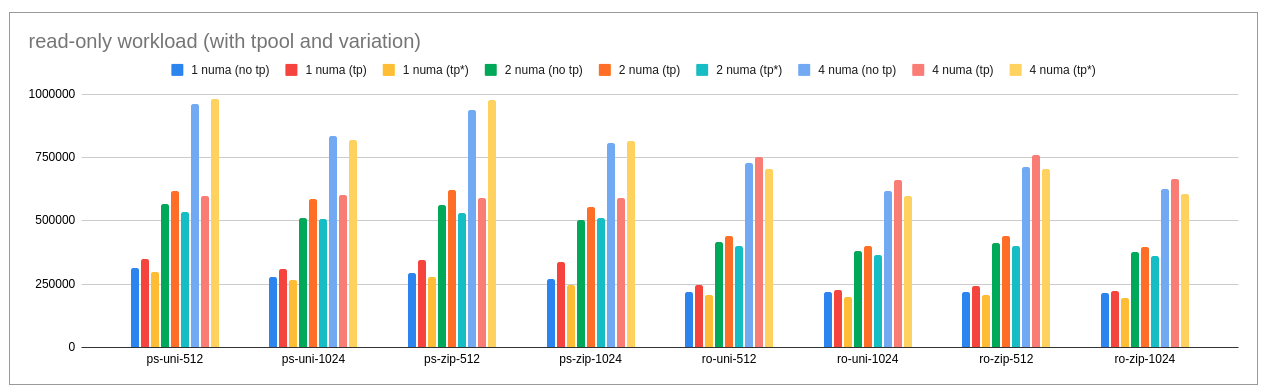

- read-only workload:

- Enabling threadpool helps improve the performance in a range of 10-20% for the majority of the scenarios except for 4 NUMA point-select use-case.

- For 4 NUMA point select, there is a regression in performance (30-40%) with thread pool enabled.

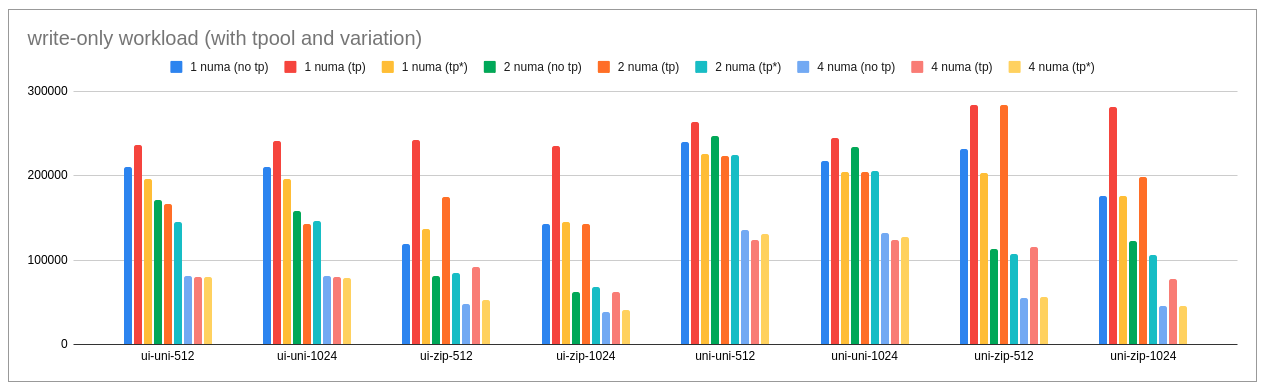

- write workload:

- Enabling threadpool helps improve the write performance especially with the high contention use-case. Infact, in some cases, improvement is up to 100% that is 2x performance.

- For the non-contention use-case, the performance of 4 NUMA threadpool is either on par or marginally less in some cases.

So we have mixed results with the majority of the cases seeing good benefit with the use of thread pool. The main cause of concern is the 4 NUMA point-select that have shown serious regression. Performance Profiling revealed that the issue is related to memory allocation in lock-free-hash due to the limited number of threads with higher throughput (1 million).

+ 62.41% 550335 mysqld mariadbd [.] lf_alloc_new

Threadpool default configuration boots 112 threads (based on a number of cores allotted to the server) threads. Limited threads serving 1024 scalability introduce the NUMA allocation challenge.

This prompted us to increase the base number of thread groups from default to 1024+

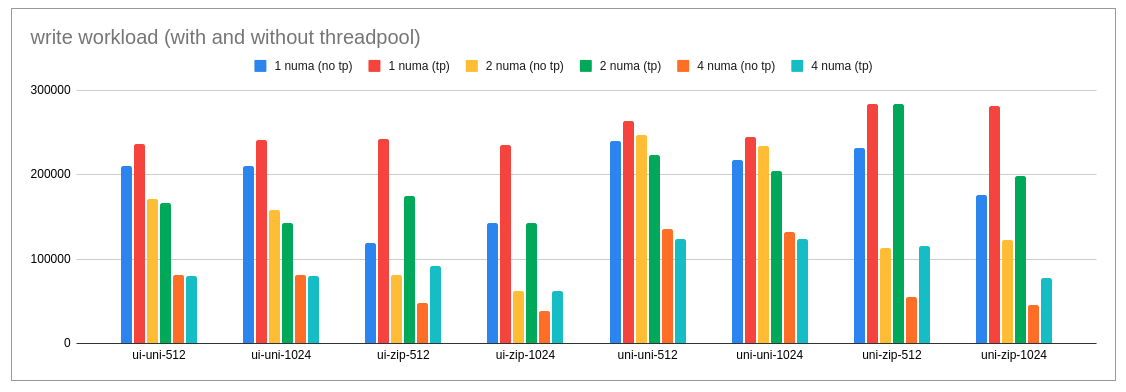

With Thread Pool (thread_handling=pool-of-threads, thread_pool_size=1500, thread_pool_idle_timeout=1)

Setting thread_pool_size > 1024 is pretty clear but then why we need to set thread_pool_idle_timeout. This will ensure that if the thread is not serving any query or workload for more than 1 sec it is released and doesn’t hog the resources. Especially important when we try workload < 1024 threads (like 512 use-cases). (default of thread_pool_idle_timeout=60 sec which is too high for short workloads).

Note: legend tpool* refer to the above configuration

Observations

- read-only workload:

- So it helped 4 NUMA point-select use-case but made other scenarios worse or comparable to the w/o threadpool variant.

- write workload:

- So additional fine-tuning doesn’t help.

That suggests depending on the workload user should tune the thread-pool parameters but enabling threadpool for system-wide usage seems to be a good idea including NUMA scalability.

Conclusion

Enabling thread pool continue to help in most of the scenarios (with a significant gain observed in high contention scenario) but increasing NUMA scalability seems to pose a challenge that needs some more attention to help resolve the LF_HASH allocation issue.

If you have more questions/queries do let me know. Will try to answer them.